Stable-Diffusion videos- cuda version 100% working

Stable-Diffusion videos- cuda version 100% working

Full guide on how to implement sd 1.5 in cuda and Stable-Diffusion Walk Pipeline for sd videos.

Hi everyone, real quick this is the continuation of my previous article stable-diffusion using Jax/FAX! for TPU implementation.

Article Link: https://bobrupakroy.medium.com/awsum-stable-diffusion-1-4-in-kaggle-a47214201580

Of course, TPUs are costly and not made for personal use. So, today we will see how to apply stable-diffusion using cuda, thought cuda will easier as all do have Nvidia Graphics but even if we don't have it No Worries! i got that covered too.

We will use Kaggle’s TESLA GPU to perform our stable diffusion for free of course. So lets get started.

#install the diffuser package

#pip install --upgrade pip

!pip install --upgrade diffusers transformers scipy

#load the model from stable-diffusion model card

import torch

from diffusers import StableDiffusionPipeline

from huggingface_hub import notebook_loginModel Loading

Weights are available in 🤗 Hub as part of the Stable Diffusion repo. The Stable Diffusion model is distributed under the CreateML OpenRail-M license. It’s an open license that claims no rights on the outputs you generate and prohibits you from deliberately producing illegal or harmful content. The model card provides more details, so take a moment to read them and consider carefully whether you accept the license.

https://huggingface.co/CompVis/stable-diffusion-v1-4

If you do, you need to be a registered user in 🤗 Hugging Face Hub and use an access token for the code to work. You have two options to provide your access token:

- Use the

huggingface-cli logincommand-line tool in your terminal and paste your token when prompted. It will be saved in a file in your computer. - Or use

notebook_login()in a notebook, which does the same thing.

The following cell will present a login interface unless you’ve already authenticated before in this computer. You’ll need to paste your access token.

if not (Path.home()/'.huggingface'/'token').exists(): notebook_login()model_id = "CompVis/stable-diffusion-v1-4"

device = "cuda"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to(device)To show a single image

%%time

#Provide the Keywords

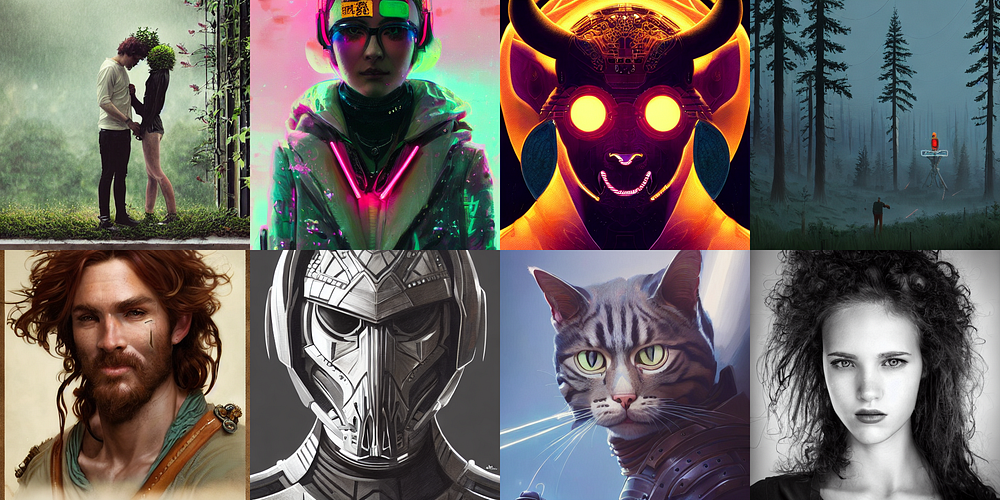

prompts = [

"a couple holding hands with plants growing out of their heads, growth of a couple, rainy day, atmospheric, bokeh matte masterpiece by artgerm by wlop by alphonse muhca ",

"detailed portrait beautiful Neon Operator Girl, cyberpunk futuristic neon, reflective puffy coat, decorated with traditional Japanese ornaments by Ismail inceoglu dragan bibin hans thoma greg rutkowski Alexandros Pyromallis Nekro Rene Maritte Illustrated, Perfect face, fine details, realistic shaded, fine-face, pretty face",

"symmetry!! portrait of minotaur, sci - fi, glowing lights!! intricate, elegant, highly detailed, digital painting, artstation, concept art, smooth, sharp focus, illustration, art by artgerm and greg rutkowski and alphonse mucha, 8 k ",

"Human, Simon Stalenhag in forest clearing style, trends on artstation, artstation HD, artstation, unreal engine, 4k, 8k",

"portrait of a young ruggedly handsome but joyful pirate, male, masculine, upper body, red hair, long hair, d & d, fantasy, roguish smirk, intricate, elegant, highly detailed, digital painting, artstation, concept art, matte, sharp focus, illustration, art by artgerm and greg rutkowski and alphonse mucha ",

"Symmetry!! portrait of a sith lord, warrior in sci-fi armour, tech wear, muscular!! sci-fi, intricate, elegant, highly detailed, digital painting, artstation, concept art, smooth, sharp focus, illustration, art by artgerm and greg rutkowski and alphonse mucha",

"highly detailed portrait of a cat knight wearing heavy armor, stephen bliss, unreal engine, greg rutkowski, loish, rhads, beeple, makoto shinkai and lois van baarle, ilya kuvshinov, rossdraws, tom bagshaw, tom whalen, alphonse mucha, global illumination, god rays, detailed and intricate environment ",

"black and white portrait photo, the most beautiful girl in the world, earth, year 2447, cdx"

]%%time

#show the results

images = pipe(prompts).images

images

#show a single result

images[0]

And to show a grid of image

#show the results in grid

from PIL import Image

def image_grid(imgs, rows, cols):

w,h = imgs[0].size

grid = Image.new('RGB', size=(cols*w, rows*h))

for i, img in enumerate(imgs): grid.paste(img, box=(i%cols*w, i//cols*h))

return grid

grid = image_grid(images, rows=2, cols=4)

grid

#Save the results

grid.save("result_images.png")

Note: If you are limited by GPU memory and have less than 4GB of GPU RAM available, please make sure to load the StableDiffusionPipeline in float16 precision instead of the default float32 precision as done above. You can do so by telling diffusers to expect the weights to be in float16 precision:

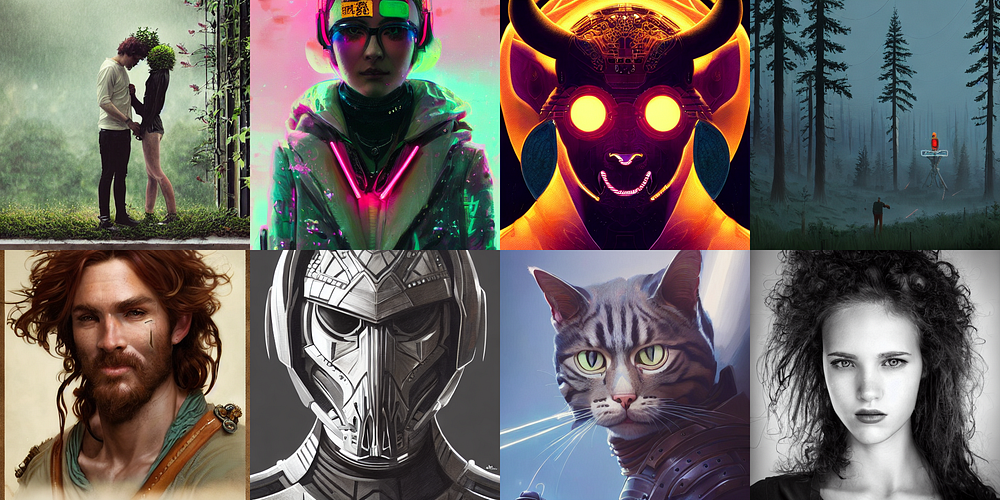

%%time

import torch

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to(device)

pipe.enable_attention_slicing()

images2 = pipe(prompts)

images2[0]

grid2 = image_grid(images, rows=2, cols=4)

grid2

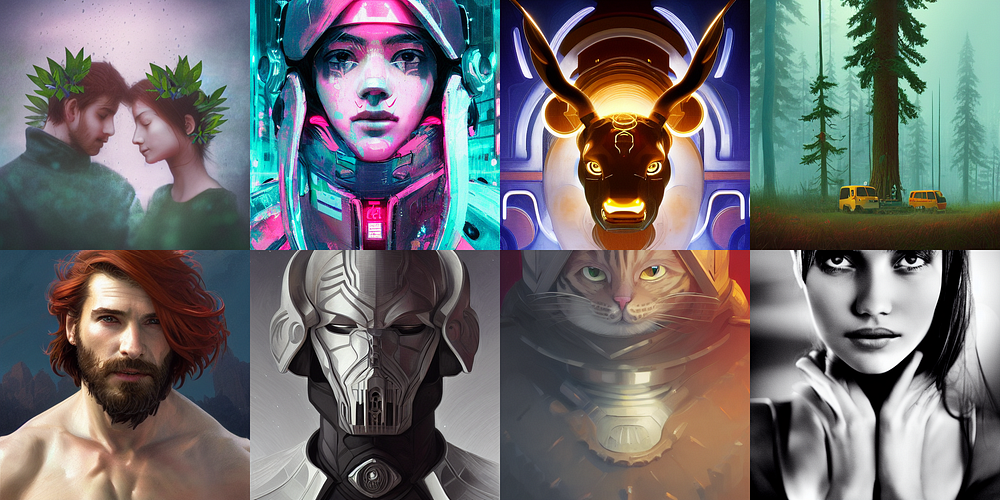

To swap out the noise scheduler, pass it to from_pretrained:

%%time

from diffusers import StableDiffusionPipeline, EulerDiscreteScheduler

model_id = "CompVis/stable-diffusion-v1-4"

# Use the Euler scheduler here instead

scheduler = EulerDiscreteScheduler.from_pretrained(model_id, subfolder="scheduler")

pipe = StableDiffusionPipeline.from_pretrained(model_id, scheduler=scheduler, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

images3 = pipe(prompts)

images3[0][0]

#save the final output

grid3.save("results_stable_diffusionv1.4.png")

#results are saved in tuple

images3[0][0]

grid3 = image_grid(images3[0], rows=2, cols=4)

grid3

#save the final output

grid3.save("results_stable_diffusionv1.4.png")

Tutorial Video: https://youtu.be/ZnGM5oua3sc

Now let’s create a video using helping of interpolation.

Go to the Kaggle Notebook Settings: Select Accelerator GPU100 or any,

then install the required package

pip install -U stable_diffusion_videos

from huggingface_hub import notebook_login

notebook_login()#Making Videos

from stable_diffusion_videos import StableDiffusionWalkPipeline

import torch

#"CompVis/stable-diffusion-v1-4" for 1.4

pipeline = StableDiffusionWalkPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5",

torch_dtype=torch.float16,

revision="fp16",

).to("cuda")#Generate the video Prompts 1

video_path = pipeline.walk(

prompts=['A detailed infographic, marginalia titled ‘Face of sadness’ description ‘Order of the occult princess’ portrait, character design, worn, dark, manga style, extremely high detail, photo realistic, pen and ink, intricate line drawings, by MC Escher, Yoshitaka Amano, Ruan Jia, Kentaro Miura, Artgerm, style by eddie mendoza, raphael lacoste, alex ross',

'A detailed infographic, marginalia titled ‘Face of sadness’ description ‘Order of the occult princess’ portrait, character design, worn, dark, manga style, extremely high detail, photo realistic, pen and ink, intricate line drawings, by MC Escher, Yoshitaka Amano, Ruan Jia, Kentaro Miura, Artgerm, style by eddie mendoza, raphael lacoste, alex ross',

'A detailed infographic, marginalia titled ‘Face of sadness’ description ‘Order of the occult princess’ portrait, character design, worn, dark, manga style, extremely high detail, photo realistic, pen and ink, intricate line drawings, by MC Escher, Yoshitaka Amano, Ruan Jia, Kentaro Miura, Artgerm, style by eddie mendoza, raphael lacoste, alex ross',

'A detailed infographic, marginalia titled ‘Face of sadness’ description ‘Order of the occult princess’ portrait, character design, worn, dark, manga style, extremely high detail, photo realistic, pen and ink, intricate line drawings, by MC Escher, Yoshitaka Amano, Ruan Jia, Kentaro Miura, Artgerm, style by eddie mendoza, raphael lacoste, alex ross'],

seeds=[2001,3001,4001,50001],

num_interpolation_steps=50,

#height=1280, # use multiples of 64 if > 512. Multiples of 8 if < 512.

#width=720, # use multiples of 64 if > 512. Multiples of 8 if < 512.

output_dir='dreams', # Where images/videos will be saved

name='imagine', # Subdirectory of output_dir where images/videos will be saved

guidance_scale=12.5, # Higher adheres to prompt more, lower lets model take the wheel

num_inference_steps=50, # Number of diffusion steps per image generated. 50 is good default

)Results: https://youtu.be/gxLc5Ox8fFU

SCALE UP THE IMAGE AT 4K, so that you can use the sequence of images for creating your own video

#upScale 4X

from stable_diffusion_videos import RealESRGANModel

model = RealESRGANModel.from_pretrained('nateraw/real-esrgan')

model.upsample_imagefolder('/kaggle/working/dreams/imagine/imagine_000000/',

'/kaggle/working/dreams/imagine4K_00')Prompt 2:

#Generate the video Architecture Prompts 2

video_path = pipeline.walk(

prompts=['environment living room interior, mid century modern, indoor garden with fountain, retro,m vintage, designer furniture made of wood and plastic, concrete table, wood walls, indoor potted tree, large window, outdoor forest landscape, beautiful sunset, cinematic, concept art, sunstainable architecture, octane render, utopia, ethereal, cinematic light, –ar 16:9 –stylize 45000',

'environment living room interior, mid century modern, indoor garden with fountain, retro,m vintage, designer furniture made of wood and plastic, concrete table, wood walls, indoor potted tree, large window, outdoor forest landscape, beautiful sunset, cinematic, concept art, sunstainable architecture, octane render, utopia, ethereal, cinematic light, –ar 16:9 –stylize 45000',

'environment living room interior, mid century modern, indoor garden with fountain, retro,m vintage, designer furniture made of wood and plastic, concrete table, wood walls, indoor potted tree, large window, outdoor forest landscape, beautiful sunset, cinematic, concept art, sunstainable architecture, octane render, utopia, ethereal, cinematic light, –ar 16:9 –stylize 45000',

'environment living room interior, mid century modern, indoor garden with fountain, retro,m vintage, designer furniture made of wood and plastic, concrete table, wood walls, indoor potted tree, large window, outdoor forest landscape, beautiful sunset, cinematic, concept art, sunstainable architecture, octane render, utopia, ethereal, cinematic light, –ar 16:9 –stylize 45000',

'environment living room interior, mid century modern, indoor garden with fountain, retro,m vintage, designer furniture made of wood and plastic, concrete table, wood walls, indoor potted tree, large window, outdoor forest landscape, beautiful sunset, cinematic, concept art, sunstainable architecture, octane render, utopia, ethereal, cinematic light, –ar 16:9 –stylize 45000'],

seeds=[42,333,444,555],

num_interpolation_steps=50,

#height=1280, # use multiples of 64 if > 512. Multiples of 8 if < 512.

#width=720, # use multiples of 64 if > 512. Multiples of 8 if < 512.

output_dir='dreams', # Where images/videos will be saved

name='imagine', # Subdirectory of output_dir where images/videos will be saved

guidance_scale=8.5, # Higher adheres to prompt more, lower lets model take the wheel

num_inference_steps=50, # Number of diffusion steps per image generated. 50 is good default

)#upScale 4X

from stable_diffusion_videos import RealESRGANModel

model = RealESRGANModel.from_pretrained('nateraw/real-esrgan')

model.upsample_imagefolder('/kaggle/working/dreams/imagine/imagine_000000/', '/kaggle/working/dreams/imagine4K_00')

Prompt 3: Grand City

#Generate the video # GRAND CITY PROMPT

video_path = pipeline.walk(

prompts=['A grand city in the year 2100, atmospheric, hyper realistic, 8k, epic composition, cinematic, octane render, artstation landscape vista photography by Carr Clifton & Galen Rowell, 16K resolution, Landscape veduta photo by Dustin Lefevre & tdraw, 8k resolution, detailed landscape painting by Ivan Shishkin, DeviantArt, Flickr, rendered in Enscape, Miyazaki, Nausicaa Ghibli, Breath of The Wild, 4k detailed post processing, artstation, rendering by octane, unreal engine —ar 16:9',

'A grand city in the year 2100, atmospheric, hyper realistic, 8k, epic composition, cinematic, octane render, artstation landscape vista photography by Carr Clifton & Galen Rowell, 16K resolution, Landscape veduta photo by Dustin Lefevre & tdraw, 8k resolution, detailed landscape painting by Ivan Shishkin, DeviantArt, Flickr, rendered in Enscape, Miyazaki, Nausicaa Ghibli, Breath of The Wild, 4k detailed post processing, artstation, rendering by octane, unreal engine —ar 16:9',

'A grand city in the year 2100, atmospheric, hyper realistic, 8k, epic composition, cinematic, octane render, artstation landscape vista photography by Carr Clifton & Galen Rowell, 16K resolution, Landscape veduta photo by Dustin Lefevre & tdraw, 8k resolution, detailed landscape painting by Ivan Shishkin, DeviantArt, Flickr, rendered in Enscape, Miyazaki, Nausicaa Ghibli, Breath of The Wild, 4k detailed post processing, artstation, rendering by octane, unreal engine —ar 16:9',

'A grand city in the year 2100, atmospheric, hyper realistic, 8k, epic composition, cinematic, octane render, artstation landscape vista photography by Carr Clifton & Galen Rowell, 16K resolution, Landscape veduta photo by Dustin Lefevre & tdraw, 8k resolution, detailed landscape painting by Ivan Shishkin, DeviantArt, Flickr, rendered in Enscape, Miyazaki, Nausicaa Ghibli, Breath of The Wild, 4k detailed post processing, artstation, rendering by octane, unreal engine —ar 16:9'],

seeds=[42,444,555,666],

num_interpolation_steps=50,

#height=1280, # use multiples of 64 if > 512. Multiples of 8 if < 512.

#width=720, # use multiples of 64 if > 512. Multiples of 8 if < 512.

output_dir='dreams', # Where images/videos will be saved

name='imagine', # Subdirectory of output_dir where images/videos will be saved

guidance_scale=8.5, # Higher adheres to prompt more, lower lets model take the wheel

num_inference_steps=50, # Number of diffusion steps per image generated. 50 is good default

)#upScale 4X

from stable_diffusion_videos import RealESRGANModel

model = RealESRGANModel.from_pretrained('nateraw/real-esrgan')

model.upsample_imagefolder('/kaggle/working/dreams/imagine/imagine_000002/',

'/kaggle/working/dreams/imagine4K_02')Making Music Videos New! Music can be added to the video by providing a path to an audio file. The audio will inform the rate of interpolation so the videos move to the beat.

%%capture

! pip install youtube-dl

! youtube-dl -f bestaudio --extract-audio --audio-format mp3 --audio-quality 0 -o "music/thoughts.%(ext)s" https://soundcloud.com/nateraw/thoughts

from IPython.display import Audio

Audio(filename='music/thoughts.mp3')# Seconds in the song.

audio_offsets = [7, 9]

fps = 8

# Convert seconds to frames

num_interpolation_steps = [(b-a) * fps for a, b in zip(audio_offsets, audio_offsets[1:])]

video_path = pipeline.walk(

prompts=['blueberry spaghetti', 'strawberry spaghetti'],

seeds=[42, 1337],

num_interpolation_steps=num_interpolation_steps,

height=512, # use multiples of 64

width=512, # use multiples of 64

audio_filepath='music/thoughts.mp3', # Use your own file

audio_start_sec=audio_offsets[0], # Start second of the provided audio

fps=fps, # important to set yourself based on the num_interpolation_steps you defined

batch_size=4, # increase until you go out of memory.

output_dir='dreams', # Where images will be saved

name=None, # Subdir of output dir. will be timestamp by default

)Output of the above code:

Kaggle Implementation: https://www.kaggle.com/rupakroy/stable-diffusion-videos

Useful Link: https://prompthero.com/

i hope you liked it, likewise I will try to bring as much new innovation from Ai realm and across.

Thanks again, for your time, if you enjoyed this short article there are tons of topics in advanced analytics, data science, and machine learning available in my medium repo. https://medium.com/@bobrupakroy

Some of my alternative internet presences are Facebook, Instagram, Udemy, Blogger, Issuu, Slideshare, Scribd, and more.

Also available on Quora @ https://www.quora.com/profile/Rupak-Bob-Roy

Let me know if you need anything. Talk Soon.

Comments

Post a Comment