Condensed Nearest Neighbor Rule Undersampling (CNN) ~ An alternative to oversampling techniques like SMOTE

Hi there, it is great to be back with some new techniques from the data science realm.

Before we get started: as usual, we will keep the article short and non-boring, so that you finish it in a happy state of mind.

In my previous article, we saw ways to perform oversampling using methods like SMOTE and its cousins. Out of curiosity, what if we go the other way around and undersample instead? That is what we will learn and perform in this article.

Condensed Nearest Neighbor Rule ~ Undersampling

The Condensed Nearest Neighbor rule, or CNN for short, seeks a subset of a collection of samples that results in no loss in model performance, also referred to as a minimal consistent set.

It is achieved by enumerating the examples in the dataset and adding each one to the ‘group’ only if it cannot be classified correctly by the current contents of the group. This approach was introduced to reduce the high memory requirements of the K-Nearest Neighbors (KNN) algorithm.

When CNN is applied to an imbalanced dataset, the group is comprised of all examples in the minority set, and examples from the majority set are added incrementally only if they cannot be classified correctly.
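To make the procedure described above concrete, here is a minimal from-scratch sketch using only the Python standard library. It is an illustration under stated assumptions, not the imbalanced-learn implementation: the names `nearest_label` and `condense` are hypothetical, the 1-nearest-neighbor check uses Euclidean distance, the group is seeded with one randomly chosen majority example, and passes are repeated until nothing new is added.

```python
import math
import random


def nearest_label(point, group):
    """Label of the stored example closest to `point` (a 1-NN prediction)."""
    _, label = min(group, key=lambda item: math.dist(point, item[0]))
    return label


def condense(X, y, minority_label, seed=0):
    """Return a condensed list of (point, label) pairs (hypothetical helper)."""
    rng = random.Random(seed)
    # The group starts with every minority example.
    group = [(x, lab) for x, lab in zip(X, y) if lab == minority_label]
    majority = [(x, lab) for x, lab in zip(X, y) if lab != minority_label]
    rng.shuffle(majority)
    # Assumption: seed the group with one randomly chosen majority example.
    if majority:
        group.append(majority.pop())
    added = True
    while added:  # repeat passes until a full pass adds nothing
        added = False
        still_out = []
        for x, lab in majority:
            if nearest_label(x, group) != lab:
                group.append((x, lab))  # misclassified, so it must be kept
                added = True
            else:
                still_out.append((x, lab))  # already covered, leave it out
        majority = still_out
    return group


# Toy data: a dense majority cluster near the origin, three minority points far away.
X = [(i * 0.1, j * 0.1) for i in range(10) for j in range(10)]
y = [0] * len(X)
X += [(5.0, 5.0), (5.1, 5.0), (5.0, 5.1)]
y += [1, 1, 1]

group = condense(X, y, minority_label=1)
print(len(X), "->", len(group))
```

On this toy data, the 100 clustered majority points collapse to a single representative, so the group holds 4 examples, yet a 1-nearest-neighbor lookup against the group still labels every original point correctly. Real data with overlapping classes will keep many more majority examples near the class boundary.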

Now let’s see how we can perform the Condensed Nearest Neighbor Rule.

from matplotlib import pyplot

from numpy import where

from collections import Counter

from sklearn.datasets import make_classification

from imblearn.under_sampling import CondensedNearestNeighbour

#define dataset

X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=2)

#summarize class distribution

counter = Counter(y)

print(counter)

#define the undersampling method

undersample = CondensedNearestNeighbour(n_neighbors=1)

#transform the dataset

X, y = undersample.fit_resample(X, y)

#summarize the new class distribution after CNN

counter = Counter(y)

print(counter)

#scatter plot of examples by class label

for label, _ in counter.items():

    row_ix = where(y == label)[0]

    pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))

pyplot.legend()

pyplot.show()

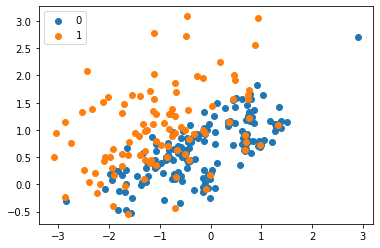

Compare the counter output before and after undersampling to see the difference.

Counter({0: 9900, 1: 100})

Counter({0: 188, 1: 100})

A criticism of the Condensed Nearest Neighbor Rule is that examples are selected randomly, especially initially, which results in the retention of unnecessary samples. To address this issue, CNN was modified, which led to the Tomek Links undersampling technique. A Tomek link is a pair of examples from different classes that are each other's nearest neighbors.
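Before turning to the library, here is a small standard-library sketch of what a Tomek link is: two examples from different classes that are each other's nearest neighbors. The helper names (`nearest_index`, `tomek_links`) are hypothetical, Euclidean distance is an assumption, and the brute-force search is only meant for tiny datasets. The cleanup step drops just the majority member of each link, which matches the counts reported in this article, where the minority class stays at 100.

```python
import math


def nearest_index(i, X):
    """Index of the point closest to X[i], excluding X[i] itself."""
    return min((j for j in range(len(X)) if j != i),
               key=lambda j: math.dist(X[i], X[j]))


def tomek_links(X, y):
    """Return index pairs (i, j), i < j, that form Tomek links."""
    links = []
    for i in range(len(X)):
        j = nearest_index(i, X)
        # A link needs different labels and a mutual nearest-neighbor relation.
        if i < j and y[i] != y[j] and nearest_index(j, X) == i:
            links.append((i, j))
    return links


# Toy 1-D style data: class 0 is the majority, class 1 the minority.
X = [(0.0, 0.0), (1.1, 0.0), (2.0, 0.0), (2.4, 0.0), (5.0, 0.0)]
y = [0, 0, 0, 1, 1]

links = tomek_links(X, y)
print(links)  # [(2, 3)]: the borderline pair at x=2.0 and x=2.4

# Drop only the majority-class member of each link.
drop = {k for pair in links for k in pair if y[k] == 0}
X_clean = [x for k, x in enumerate(X) if k not in drop]
y_clean = [lab for k, lab in enumerate(y) if k not in drop]
print(y_clean)  # [0, 0, 1, 1]
```

Only the majority point sitting right next to a minority point is removed, which is why this technique trims the class boundary rather than shrinking the majority class as a whole.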

from matplotlib import pyplot

from numpy import where

from collections import Counter

from sklearn.datasets import make_classification

from imblearn.under_sampling import TomekLinks

#define dataset

X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0, n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=1)

#summarize class distribution

counter = Counter(y)

print(counter)

#define the undersampling method

undersample = TomekLinks()

#transform the dataset

X, y = undersample.fit_resample(X, y)

#summarize the new class distribution after TomekLinks

counter = Counter(y)

print(counter)

#scatter plot of examples by class label

for label, _ in counter.items():

    row_ix = where(y == label)[0]

    pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))

pyplot.legend()

pyplot.show()

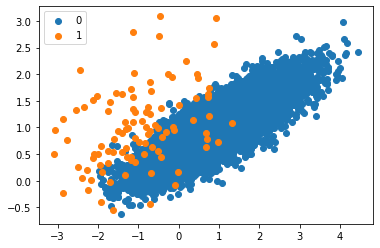

Counter({0: 9900, 1: 100})

Counter({0: 9874, 1: 100})

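We can clearly see the difference. Tomek Links removed only 26 majority examples (9900 down to 9874), because it targets borderline and noisy instances, while CNN removed most of the majority class (9900 down to 188), because it targets redundant instances.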

Here we are at the end of the story!

The next article will cover the Edited Nearest Neighbors Rule (ENN) for undersampling.

I hope you enjoyed it. If you wish to learn more, you can search for CNN undersampling techniques or visit the Machine Learning Mastery site.

As always, I will try my best to bring you more new ways of doing data science.

If you wish to explore more of them, follow my other articles.

You can also find me on Facebook, Instagram, Udemy, Blogger, Issuu, and more.

Also available on Quora @ https://www.quora.com/profile/Rupak-Bob-Royy
