Common Interview Prep. Qs

A refreshing walkthrough of what we learned in the past

Hi there, and thanks for the continued support for my previous articles. Today we continue from the previous article, “Data Science Interview Q’s — V”, with the essential questions interviewers commonly ask to test root-level knowledge of data science, rather than fancy advanced questions.

Logistic Regression:

1.1. Is Logistic Regression a linear or nonlinear classifier?

1.2. Is the decision boundary Linear or Non-linear in the case of a Logistic Regression model?

1.3. Does Logistic regression have a closed-form solution for maximizing log-likelihood?

1.4. Does Logistic regression in its vanilla form have the ability to handle outliers?

1.5. Can Logistic Regression be used for multi-class classification?

1.6. Is Logistic regression a generative model like the Naive Bayes model?

2.1. What are 3 assumptions of logistic regression in terms of features and targets?

2.2. How is the derivative of the sigmoid function related to itself?

2.3. What is the softmax function?

2.4. What are some hyper-parameters we can optimize while training a logistic regression model?

2.5. Mention any 2 techniques you can use to solve for collinearity in your feature space?

2.6 Regression coefficients (w) in logistic regression are estimated using which technique?

3.1. How do we handle categorical variables in Logistic Regression?

3.2. How do we solve the multiclass classification problems using Logistic Regression?

3.3. What are some advantages of batch gradient descent over stochastic gradient descent (SGD), and vice versa? (Generic ML question.)

3.4. Given a trained logistic regression model with N features, what is the run-time and space complexity to predict for a single point?

3.5. While predicting using a trained model, how do you interpret the class probabilities predicted by the model for a test sample?

3.6. What are some properties of the sigmoid function that make it a good candidate for use in logistic regression?

3.7 How can you use deviance to measure the goodness of fit for a logistic regression model?
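
As a quick refresher for questions 2.2 and 2.3, here is a minimal Python sketch, offered as an illustration rather than a reference implementation. It writes the sigmoid derivative in terms of the sigmoid itself, s * (1 - s), and shows a softmax that reduces to the sigmoid in the two-class case. The function names are illustrative choices, not part of any library.

```python
import math

def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Derivative of the sigmoid, expressed through the sigmoid: s * (1 - s)."""
    s = sigmoid(z)
    return s * (1.0 - s)

def numeric_grad(z, h=1e-6):
    """Central finite difference, used only to sanity-check sigmoid_grad."""
    return (sigmoid(z + h) - sigmoid(z - h)) / (2.0 * h)

def softmax(scores):
    """Map raw scores to probabilities that sum to 1."""
    m = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

if __name__ == "__main__":
    z = 1.3
    print(sigmoid_grad(z), numeric_grad(z))  # the two values should agree closely
    print(softmax([z, 0.0])[0], sigmoid(z))  # two-class softmax matches the sigmoid
```

The two-class check is the reason softmax is often described as the multi-class generalization of the sigmoid.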

— — — — — — — — — — — — — — — — — — — — — — — — — —

Linear Regression:

1.1. Should you use linear or logistic regression to predict the price of a house?

1.2. Can Lasso regularization (L1) be used for variable selection in Linear Regression?

1.3. Would you prefer a linear regression model that has higher or lower residuals?

1.4. Is linear regression sensitive to outliers?

1.5. What is the closed-form solution to find weight vector W, given a feature matrix X and label column vector y ?

2.1. What are 3 assumptions of linear regression?

2.2. What is Heteroscedasticity?

2.3. In linear regression, what is the value of the sum of the residuals for a given dataset?

2.4. What are 2 common techniques that are adopted to find the parameters of the linear regression line which best fits the model?

2.5. Through which point does the best-fit line pass when it is fitted with OLS, i.e. with MSE as the cost/loss function? Which point does it pass through when MAE is used instead?

3.1. Compare the advantages of ordinary least squares (OLS) and stochastic gradient descent (SGD) for finding the parameters of a linear regression.

3.2. What tests can be used to determine whether a linear association exists between the dependent and independent variables in a linear regression model?

3.3. What is the interpretation of R square when evaluating linear regression?

3.4. What are the drawbacks of using too many or too few variables?

3.5. What do you understand by a Q-Q plot in linear regression?
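
To make questions 1.5, 2.3, 2.5 and 3.3 concrete, here is a small Python sketch under simplifying assumptions: a single feature plus an intercept, where the closed-form solution w = (XᵀX)⁻¹Xᵀy reduces to the familiar slope and intercept formulas. The data is a made-up toy set and the function names are illustrative only.

```python
def fit_simple_ols(xs, ys):
    """Closed-form least squares fit for y = intercept + slope * x."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean  # forces the line through (x_mean, y_mean)
    return slope, intercept

def r_squared(xs, ys, slope, intercept):
    """Share of the variance in y explained by the fitted line."""
    y_mean = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - y_mean) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot

if __name__ == "__main__":
    # Toy data, invented purely for illustration.
    xs = [1.0, 2.0, 3.0, 4.0, 5.0]
    ys = [2.1, 3.9, 6.2, 7.8, 10.1]
    slope, intercept = fit_simple_ols(xs, ys)
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    print("slope, intercept:", slope, intercept)
    print("sum of residuals:", sum(residuals))  # ~0 up to floating-point error
    print("R squared:", r_squared(xs, ys, slope, intercept))
```

With an intercept in the model, the residuals of a least squares fit sum to zero and the fitted line passes through the point of means, which is what the printout lets you check.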

— — — — — — — — — — — — — — — — — — — — — — — — —

K-Means

1.1. Is K-Nearest Neighbors a supervised or unsupervised learning algorithm?

1.2. Is K-means a supervised or unsupervised learning algorithm?

1.3. Does k stand for the number of clusters in K-Means?

1.4. Does centroid initialization affect K means Algorithm?

1.5. Does k-means work if clusters are of different sizes and variances?

1.6. Is k-means sensitive to outliers?

2.1. Why do you prefer Euclidean distance over Manhattan distance in the K means Algorithm?

2.2. What is the Big O complexity of training a K-means model (using Lloyd’s algorithm, for example), and of predicting the cluster for an incoming point?

2.3. What parameters does a trained k-means model contain?

2.4. Mention 3 drawbacks of the k-means algorithm?

2.5. How does the mean-shift clustering algorithm overcome issues of the k-means?

3.1. What is Lloyd’s algorithm for Clustering?

3.2. What are some techniques to decide k as a parameter in k-Means?

3.3. What are some strategies for early stopping criteria in k-means?

3.4. How does the silhouette score evaluate a k-means model?

3.5. What is the k-means ++ algorithm?

3.6. How do we increase the training speed of the k-means algorithm (Lloyd)?

3.7. What is the Fuzzy k-means algorithm?
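
For questions 2.3, 3.1 and 3.3, the following is a minimal sketch of Lloyd’s algorithm in plain Python, assuming Euclidean distance and caller-supplied initial centroids (no k-means++ seeding). The function names, the `tol` stopping threshold and the toy points are assumptions made for illustration.

```python
import math

def assign(points, centroids):
    """Assignment step: index of the nearest centroid for each point."""
    return [
        min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
        for p in points
    ]

def update(points, labels, centroids):
    """Update step: move each centroid to the mean of its assigned points."""
    new_centroids = []
    for j, old in enumerate(centroids):
        members = [p for p, label in zip(points, labels) if label == j]
        if not members:
            new_centroids.append(old)  # keep the centroid of an empty cluster
        else:
            new_centroids.append(
                tuple(sum(coord) / len(members) for coord in zip(*members))
            )
    return new_centroids

def lloyd_kmeans(points, init_centroids, max_iter=100, tol=1e-9):
    """Alternate assignment and update until centroids stop moving."""
    centroids = [tuple(c) for c in init_centroids]
    for _ in range(max_iter):
        labels = assign(points, centroids)
        new_centroids = update(points, labels, centroids)
        shift = max(math.dist(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift <= tol:  # early stopping: centroids have converged
            break
    return centroids, assign(points, centroids)

if __name__ == "__main__":
    # Toy data: two well-separated groups, invented for illustration.
    points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    centroids, labels = lloyd_kmeans(points, [(0, 0), (10, 10)])
    print(centroids)
    print(labels)
```

Note that the trained model is nothing more than the list of centroids, and predicting for a new point is a single call to the assignment step.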

— — — — — — — — — — — — — — — — — — — — — —

Random Forest

1.1 Is scaling of features required for random forest classifiers? (why?)

1.2 What is the range of the Gini score?

1.3 Is random forest robust to outliers?

1.4 Is a random forest a non-linear algorithm?

1.5 Is it a boosting algorithm?

2.1 What are some crucial parameters to tune in the Random forest apart from the depth of the tree and the number of trees/estimators?

2.2 Why are random forests more easily parallelizable as compared to boosting algorithms?

2.3 What is pruning of trees in a random forest? What are its advantages?

2.4 What is the subtle difference (yet important) between the bagging of trees and random forests?

2.5 Given N features, what is the recommended size of the feature subset considered when splitting a node (for both classification and regression)?

2.6 What hyperparameters can be leveraged to reduce the correlation between trees?

3.1 Explain how the CART algorithm works and its advantages.

3.2 How to select the best split in decision trees using Gini Impurity?

3.3 What strategies does Random Forest employ to reduce variance in predictions while maintaining the low bias that is characteristic of a lone Decision Tree?

3.4. Why is a Random forest algorithm biased toward selecting features with many categories?

3.5 Why would you limit the depth of trees?
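
For questions 1.2 and 3.2, here is a short Python sketch of Gini impurity and a threshold search on a single numeric feature. It is a simplified illustration of the idea behind CART-style splitting, not how any particular library implements it, and the function names and toy data are assumptions. For K classes the impurity ranges from 0 (a pure node) to 1 - 1/K, so 0.5 is the maximum in the binary case.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_threshold(values, labels):
    """Return (threshold, weighted Gini) of the best binary split on one feature."""
    pairs = sorted(zip(values, labels), key=lambda pair: pair[0])
    n = len(pairs)
    best_thr, best_score = None, float("inf")
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between identical feature values
        thr = (pairs[i - 1][0] + pairs[i][0]) / 2.0  # midpoint candidate
        left = [label for _, label in pairs[:i]]
        right = [label for _, label in pairs[i:]]
        # Weight each child's impurity by its share of the samples.
        score = len(left) / n * gini(left) + len(right) / n * gini(right)
        if score < best_score:
            best_thr, best_score = thr, score
    return best_thr, best_score

if __name__ == "__main__":
    # Toy data, invented for illustration.
    values = [1, 2, 3, 10, 11, 12]
    labels = [0, 0, 0, 1, 1, 1]
    print(gini(labels))
    print(best_threshold(values, labels))
```

A full tree repeats this search over every candidate feature at every node, while a random forest restricts each node to a random subset of the features.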

Thanks again for your time. If you enjoyed this short article, there are tons of topics in advanced analytics, data science, and machine learning available in my Medium repo: https://medium.com/@bobrupakroy

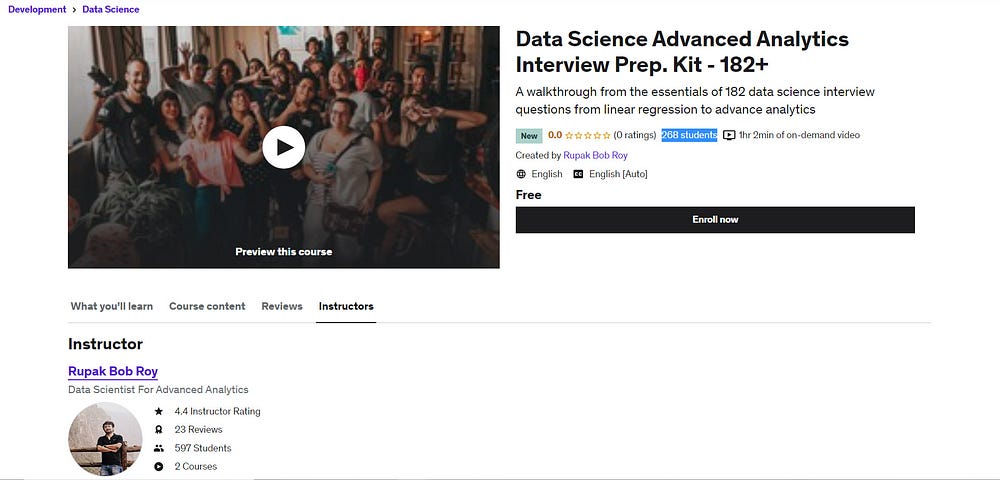

Some of my other internet presences: Facebook, Instagram, Udemy, Blogger, Issuu, Slideshare, Scribd and more.

Also available on Quora @ https://www.quora.com/profile/Rupak-Bob-Roy

Let me know if you need anything. Talk Soon.
