AutoXGB + Optuna: An automated version of XGBoost, one of the most powerful machine learning algorithms

Hi everyone, what's up? All good? Today we will go through a new way to automate XGBoost with AutoXGB.

We will start with regular XGBoost and then move on to the more advanced AutoXGB. Alright?

First, import the libraries and load the data.

# Importing the libraries

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

# Importing the dataset

dataset = pd.read_csv('credit_data.csv', sep=",")

#drop the missing values

dataset = dataset.dropna()

X = dataset.iloc[:,1:4].values

y = dataset.iloc[:, 4].values

#------------------------------------------------------

#Splitting the dataset into the Training set and Test set

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.25, random_state = 0)

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test)

Regular XGBoost

#---XGBOOST------------------

# Fitting XGBoost to the Training set

from xgboost import XGBClassifier

classifier = XGBClassifier()

classifier.fit(X_train, y_train)

# Predicting the Test set results

y_pred_xg = classifier.predict(X_test)

# Making the Confusion Matrix

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_pred_xg)

#evaluation Metrics

from sklearn import metrics

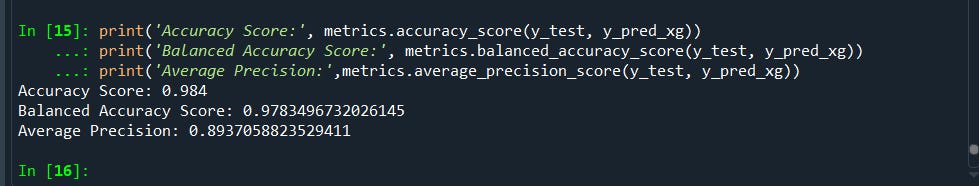

print('Accuracy Score:', metrics.accuracy_score(y_test, y_pred_xg))

print('Balanced Accuracy Score:', metrics.balanced_accuracy_score(y_test, y_pred_xg))

print('Average Precision:',metrics.average_precision_score(y_test, y_pred_xg))
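The accuracy and balanced accuracy printed above can both be read off the confusion matrix `cm`. Below is a minimal sketch on made-up, imbalanced labels (`y_true_demo` and `y_pred_demo` are hypothetical, not the credit data) that recomputes both scores by hand from scikit-learn's `confusion_matrix` and cross-checks them. Balanced accuracy averages the recall of each class, so with a rare positive class such as a default flag it can sit well below plain accuracy.

```python
import numpy as np
from sklearn.metrics import accuracy_score, balanced_accuracy_score, confusion_matrix

# Hypothetical, imbalanced toy labels: 8 negatives, 2 positives
y_true_demo = np.array([0, 0, 0, 0, 0, 0, 0, 0, 1, 1])
y_pred_demo = np.array([0, 0, 0, 0, 0, 0, 0, 1, 1, 0])

# For binary labels [0, 1], ravel() returns the counts in this order
tn, fp, fn, tp = confusion_matrix(y_true_demo, y_pred_demo).ravel()

accuracy = (tp + tn) / (tp + tn + fp + fn)          # share of all predictions that are correct
recall_pos = tp / (tp + fn)                          # true positive rate (class 1)
recall_neg = tn / (tn + fp)                          # true negative rate (class 0)
balanced_accuracy = (recall_pos + recall_neg) / 2    # mean recall over both classes

print(f"accuracy={accuracy:.4f}, balanced accuracy={balanced_accuracy:.4f}")
# Cross-check against scikit-learn's own implementations
print(accuracy_score(y_true_demo, y_pred_demo), balanced_accuracy_score(y_true_demo, y_pred_demo))
```

Here 8 of 10 predictions are correct, but only one of the two positives is found, which pulls the balanced score down.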

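Now let’s perform RandomizedSearchCV, an alternative to GridSearchCV. GridSearchCV uses brute force: it fits every combination in the grid, so it takes a lot of processing power and time. RandomizedSearchCV instead fits only a fixed number (n_iter) of randomly sampled combinations.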

# Set parameter grid

xgb_params = {'max_depth': [3, 5, 6, 8, 9, 10, 11],

'learning_rate': [0.01, 0.1, 0.2, 0.3, 0.5],

'subsample': np.arange(0.4, 1.0, 0.1),

'colsample_bytree': np.arange(0.3, 1.0, 0.1),

'colsample_bylevel': np.arange(0.3, 1.0, 0.1),

'n_estimators': np.arange(100, 600, 100),

'gamma': np.arange(0, 0.7, 0.1)}

from sklearn.model_selection import RandomizedSearchCV

# Create RandomizedSearchCV instance

xgb_grid = RandomizedSearchCV(estimator=XGBClassifier(objective='binary:logistic',

tree_method="gpu_hist", # Use GPU (needs a CUDA GPU; XGBoost >= 2.0 deprecates this in favour of tree_method="hist", device="cuda")

random_state=42,

eval_metric='aucpr'), # AUC under PR curve

param_distributions=xgb_params,

cv=5,

verbose=2,

n_iter=60,

scoring='average_precision')

# Run XGBoost grid search

xgb_grid.fit(X_train, y_train)

#to get the optimal setting for xgboost

print(xgb_grid.best_params_)

print(xgb_grid.best_score_)
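To put the "brute force" remark into numbers, here is a small sketch that counts the combinations in the `xgb_params` grid above and compares the number of model fits each search would need with `cv=5` (not counting the one final refit that `refit=True` adds). It uses scikit-learn's `ParameterSampler`, the sampler behind RandomizedSearchCV, only to show that 60 combinations are drawn; the grid is redefined so the block runs on its own.

```python
import math

import numpy as np
from sklearn.model_selection import ParameterSampler

# Same search space as xgb_params above
xgb_params = {'max_depth': [3, 5, 6, 8, 9, 10, 11],
              'learning_rate': [0.01, 0.1, 0.2, 0.3, 0.5],
              'subsample': np.arange(0.4, 1.0, 0.1),
              'colsample_bytree': np.arange(0.3, 1.0, 0.1),
              'colsample_bylevel': np.arange(0.3, 1.0, 0.1),
              'n_estimators': np.arange(100, 600, 100),
              'gamma': np.arange(0, 0.7, 0.1)}

cv_folds = 5
n_iter = 60

# GridSearchCV tries every combination, each one fitted once per CV fold
n_combinations = math.prod(len(values) for values in xgb_params.values())
grid_fits = n_combinations * cv_folds

# RandomizedSearchCV only draws n_iter combinations from the same space
sampled = list(ParameterSampler(xgb_params, n_iter=n_iter, random_state=42))
random_fits = len(sampled) * cv_folds

print(f"{n_combinations:,} combinations -> {grid_fits:,} fits with GridSearchCV")
print(f"{len(sampled)} sampled combinations -> {random_fits:,} fits with RandomizedSearchCV")
```

The trade-off is that a random search may miss the single best corner of the grid, which is what smarter samplers such as Optuna's try to address.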

The best parameters (xgb_grid.best_params_):

{'subsample': 0.4,

'n_estimators': 500,

'max_depth': 3,

'learning_rate': 0.1,

'gamma': 0.4,

'colsample_bytree': 0.8000000000000003,

'colsample_bylevel': 0.7000000000000002}

and its score (xgb_grid.best_score_): 0.9934761095555403
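Values such as 0.8000000000000003 are floating-point artifacts of `np.arange` with a fractional step, not a choice the search made on purpose; numerically they are practically identical to 0.8. A minimal sketch, assuming you are free to redefine the grid, rounds the values once to the precision of the step so the reported best parameters come out clean:

```python
import numpy as np

# A fractional step accumulates floating-point error inside np.arange
raw = np.arange(0.3, 1.0, 0.1)

# Rounding once to the step's precision (1 decimal) gives clean grid values
clean = np.round(np.arange(0.3, 1.0, 0.1), 1)

print("raw:  ", raw.tolist())
print("clean:", clean.tolist())
```

The same `np.round(..., 1)` wrapper can be applied to the `subsample`, `colsample_bytree`, `colsample_bylevel` and `gamma` entries of `xgb_params`.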

Now fit the model with the best parameters

#fit the model with the best estimator

classifier1 = XGBClassifier(subsample= 0.4,n_estimators= 500,

max_depth=3,learning_rate=0.1,gamma=0.4,colsample_bytree= 0.8000000000000003,

colsample_bylevel=0.7000000000000002)

classifier1.fit(X_train,y_train)
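Retyping the best parameters by hand, as `classifier1` does, is error-prone and does not carry over the `tree_method`, `random_state` and `eval_metric` settings that were passed to the estimator inside the search. With scikit-learn's default `refit=True`, the search object already holds a model refitted on the whole training set in `best_estimator_`, and `best_params_` can be unpacked with `**`. The sketch below shows the pattern; to stay runnable without a GPU or XGBoost, it swaps in scikit-learn's `GradientBoostingClassifier` and synthetic data from `make_classification` (both assumptions, not the article's setup).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import RandomizedSearchCV, train_test_split

# Synthetic stand-in for the credit data (assumption: 3 numeric features, binary target)
X_demo, y_demo = make_classification(n_samples=300, n_features=3, n_informative=2,
                                     n_redundant=0, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.25, random_state=0)

search = RandomizedSearchCV(
    estimator=GradientBoostingClassifier(random_state=42),
    param_distributions={'max_depth': [2, 3, 4],
                         'learning_rate': [0.05, 0.1, 0.2],
                         'n_estimators': [50, 100]},
    n_iter=5, cv=3, scoring='average_precision', random_state=0)
search.fit(X_tr, y_tr)

# Option 1: reuse the model that refit=True already trained on all of X_tr
best_model = search.best_estimator_

# Option 2: rebuild it from best_params_ plus the fixed settings used in the search
rebuilt = GradientBoostingClassifier(random_state=42, **search.best_params_)
rebuilt.fit(X_tr, y_tr)

y_pred_best = best_model.predict(X_te)
y_pred_rebuilt = rebuilt.predict(X_te)
print(search.best_params_, (y_pred_best == y_pred_rebuilt).all())
```

In the article's setup, the equivalent would be `classifier1 = xgb_grid.best_estimator_`, or `XGBClassifier(**xgb_grid.best_params_)` together with the fixed arguments given to the search's estimator.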

# Predicting the Test set results

y_pred_xg1 = classifier1.predict(X_test)

# Making the Confusion Matrix

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_pred_xg1)

#evaluation Metrics

from sklearn import metrics

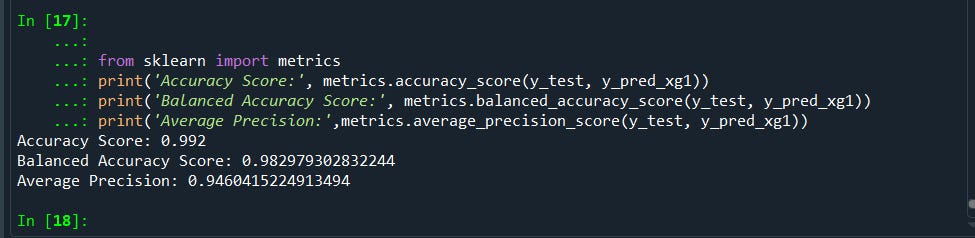

print('Accuracy Score:', metrics.accuracy_score(y_test, y_pred_xg1))

print('Balanced Accuracy Score:', metrics.balanced_accuracy_score(y_test, y_pred_xg1))

print('Average Precision:',metrics.average_precision_score(y_test, y_pred_xg1))
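One caveat before comparing the scores: `metrics.average_precision_score` is meant to rank a continuous score for the positive class, but the code above passes the hard 0/1 labels from `predict()`, so the precision-recall curve collapses to a single threshold. A small sketch with made-up scores (the `*_demo` arrays are hypothetical, not the credit data) shows how much the two can differ; on the real model the score would come from `classifier1.predict_proba(X_test)[:, 1]`.

```python
import numpy as np
from sklearn.metrics import average_precision_score

y_true_demo = np.array([0, 0, 1, 1])
y_score_demo = np.array([0.1, 0.4, 0.35, 0.8])    # hypothetical predicted probabilities of class 1
y_label_demo = (y_score_demo >= 0.5).astype(int)  # hard labels from a 0.5 threshold

ap_scores = average_precision_score(y_true_demo, y_score_demo)  # uses the full ranking
ap_labels = average_precision_score(y_true_demo, y_label_demo)  # only one usable threshold
print(f"AP from probabilities: {ap_scores:.4f}")
print(f"AP from hard labels:   {ap_labels:.4f}")
```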

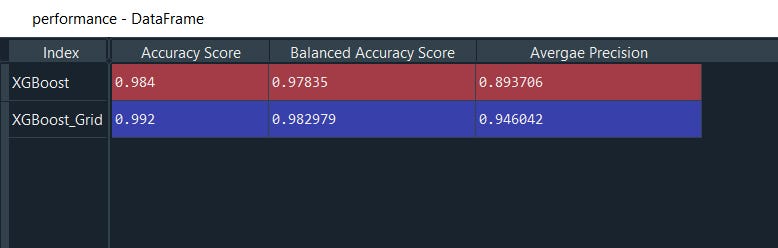

Comparison of the scores with and without RandomizedSearchCV

Now let’s try AutoXGB.

# AutoXGB

# The current version has a dependency bug, so install autoxgb without its dependencies
# (with --no-deps, packages such as xgboost and optuna must already be installed)

pip install --no-deps autoxgb

# pip install autoxgb

Now set the AutoXGB parameters.

from autoxgb import AutoXGB

# Define required parameters

train_filename = "credit_data.csv" # Path to training dataset

#train_filename = X_train

output = r"......autoXBG\output_autoxgb" # Name of output folder

# Set optional parameters

# path to test data. if specified, the model will be evaluated on the test data

# and test_predictions.csv will be saved to the output folder

# if not specified, only OOF predictions will be saved

# test_filename = "test.csv"

test_filename = None

task = "classification"

targets = ["default"]

use_gpu = True

num_folds = 5

seed = 42

num_trials = 200

time_limit = 400

fast = False

features = None

categorical_features = None
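The comments above mention OOF (out-of-fold) predictions, which are what gets saved when no test file is given. With `num_folds = 5`, each training row is predicted by the one fold model that did not see that row during training, so the predictions are an honest estimate of unseen-data performance. Below is a sketch of that idea with scikit-learn's `KFold` and a `LogisticRegression` stand-in on synthetic data (assumptions for illustration; this is not AutoXGB's internal code), checking a manual loop against `cross_val_predict`.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_predict

num_folds = 5
seed = 42

# Synthetic stand-in data (assumption): 200 rows, binary target
X_demo, y_demo = make_classification(n_samples=200, n_features=4, random_state=seed)
kf = KFold(n_splits=num_folds, shuffle=True, random_state=seed)

# Manual OOF loop: every row gets a prediction from the model trained without it
oof = np.zeros(len(y_demo))
for train_idx, valid_idx in kf.split(X_demo):
    model = LogisticRegression().fit(X_demo[train_idx], y_demo[train_idx])
    oof[valid_idx] = model.predict_proba(X_demo[valid_idx])[:, 1]

# scikit-learn's helper produces the same out-of-fold probabilities
oof_sklearn = cross_val_predict(LogisticRegression(), X_demo, y_demo, cv=kf,
                                method='predict_proba')[:, 1]
print(np.allclose(oof, oof_sklearn))
```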

For more parameter definitions from the developer, and for how to run AutoXGB from the command line (CLI), see: https://github.com/abhishekkrthakur/autoxgb

Now we will train the model

# Start AutoXGB training

axgb = AutoXGB(

train_filename=train_filename,

output=output,

test_filename=test_filename,

task=task,

targets=targets,

use_gpu=use_gpu,

num_folds=num_folds,

seed=seed,

num_trials=num_trials,

time_limit=time_limit,

fast=fast,

features=features,

categorical_features=categorical_features,

)

axgb.train()

That's it!

Here is why AutoXGB can perform better:

- It uses Bayesian optimization with Optuna for hyperparameter tuning. Because each new trial uses the results of previous trials, it usually finds good hyperparameters in fewer iterations than RandomizedSearchCV or GridSearchCV, which do not learn from earlier trials.

- As a result, Optuna can deliver better accuracy in less time.

- Optimized memory usage: according to its documentation, it consumes 8x less memory.

And the drawbacks:

- We lose significant control over parameter tuning.

- Another observable issue is the lack of control over the evaluation metrics.

Next, we will look into why Optuna is being adopted so quickly as one of the best alternatives to grid search for hyperparameter tuning.

If you find this article useful, do browse my other techniques like Bagging Classifier, Voting Classifier, Stacking, and more. I guarantee you will like them too. See you soon with another interesting topic.

You can also find me on Facebook, Instagram, Udemy, Blogger, Issuu, and more.

Also available on Quora @ https://www.quora.com/profile/Rupak-Bob-Roy

Have a good day. Talk soon.
